AI content watermarking is shaping the future of digital content. Content creation is undergoing a major transformation, and the catalyst is Artificial Intelligence (AI). At the center of this change is AI-generated content (AIGC). With its ability to produce lifelike images and coherent text, AIGC marks a new phase of the digital revolution.

However, as the capabilities of AI continue to expand, so do the challenges associated with its widespread adoption. The rapid proliferation of AIGC has raised pressing questions about its regulation and protection. While watermarking was introduced as a potential safeguard to address these concerns, recent research suggests that even this protective measure might have vulnerabilities that need addressing.

The AI Revolution in Content Creation

With their advanced algorithms and capabilities, generative deep learning models have thrust AIGC into the spotlight, revolutionizing how we perceive digital content creation. Platforms that harness the power of these models, especially notable ones like ChatGPT and Stable Diffusion, are setting new benchmarks in the quality of content they produce, offering realism and coherence that were once thought to be exclusive to human creators.

Yet this surge in AI-driven content has brought growing concerns. The commercialization of AIGC and its potential for malicious misuse have drawn legal and ethical scrutiny. Service providers find themselves in a challenging position: they must ensure users adhere to established usage policies while also implementing measures to prevent the content from being exploited.

Watermarking: A Protective Measure Under Scrutiny

Watermarking, the technique of embedding unique, imperceptible marks on content for verification and attribution, was seen as the solution. But how foolproof is it?
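To make the idea of an imperceptible mark concrete, here is a minimal sketch of least-significant-bit (LSB) watermarking on a handful of grayscale pixel values. This is a classic toy scheme chosen purely for illustration and is an assumption on our part: it is not one of the schemes examined in the study, and the function names `embed_lsb` and `extract_lsb` are hypothetical.

```python
def embed_lsb(pixels, bits):
    """Return a copy of `pixels` with `bits` written into the lowest bit
    of the first len(bits) pixel values (toy illustration only)."""
    if len(bits) > len(pixels):
        raise ValueError("watermark is longer than the image")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the watermark bit.
        marked[i] = (marked[i] & ~1) | bit
    return marked


def extract_lsb(pixels, n_bits):
    """Read back the lowest bit of the first n_bits pixel values."""
    return [p & 1 for p in pixels[:n_bits]]


# Hypothetical 8-pixel grayscale "image" and an 8-bit watermark.
image = [120, 121, 200, 37, 64, 255, 0, 18]
message = [1, 0, 1, 1, 0, 0, 1, 0]

marked = embed_lsb(image, message)
recovered = extract_lsb(marked, len(message))

# Largest per-pixel change introduced by the watermark.
max_change = max(abs(a - b) for a, b in zip(image, marked))
```

Each pixel changes by at most one intensity level, which is why the mark is invisible to the eye yet still readable by an algorithm. Schemes this simple are also fragile, which hints at why robustness is the central question.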

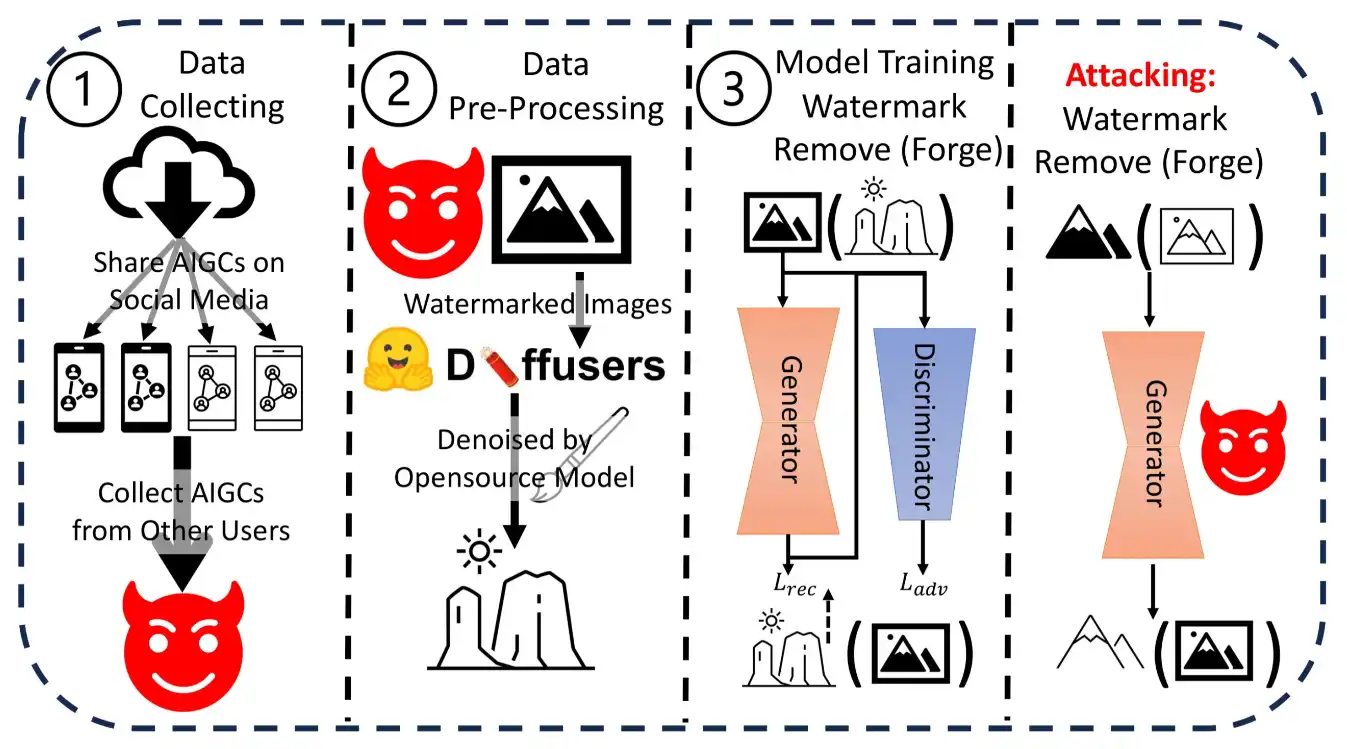

A groundbreaking study titled “Towards the Vulnerability of Watermarking Artificial Intelligence Generated Content” by a team of researchers has delved deep into this question. The paper highlights two significant vulnerabilities:

- Watermark Removal: Adversaries can erase the watermark and then use the content without restrictions.

- Watermark Forge: Malicious actors can create content with forged watermarks, leading to potential misattribution.

WMaGi: A New Framework on the Block

To study the vulnerabilities of watermarking in AI-generated content, the researchers introduced WMaGi, a framework designed to carry out both of the attacks identified above. WMaGi begins by collecting data from various sources and putting it through a purification process intended to ensure the data’s integrity and relevance.

Once this foundational data is established, WMaGi employs a Generative Adversarial Network (GAN), a class of AI model widely used for generating content. The GAN’s task within WMaGi is either to remove existing watermarks or to forge new ones. In comparative tests, WMaGi ran thousands of times faster than other prevalent methods, a result with serious implications for the future of content protection.

Diving Deeper: Related Works and Watermarking Schemes

The paper also reviews the renewed interest in generative models and their ability to produce high-quality content. Watermarking itself has evolved into a broad field, ranging from visible watermarks that humans can recognize to invisible ones that only algorithms can decode. The paper critically examines both post hoc and prior watermarking methods, shedding light on their potential vulnerabilities.

The Threat Landscape

The paper describes a realistic scenario in which service providers use generative models to produce images embedded with user-specific watermarks. In this black-box setting, adversaries can access only the generated images and have no knowledge of the model or the watermarking scheme. That the attacks work under such tight constraints makes the research findings even more significant.

Preliminaries and Contributions

The researchers formally define the watermark verification process, setting the stage for their findings. They also outline their unique contributions, emphasizing the novelty of WMaGi in the context of existing research. WMaGi doesn’t require clean data or prior knowledge of watermarking schemes, making it a groundbreaking approach.
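As a rough illustration of what watermark verification can look like, the sketch below compares the bits extracted from an image with a user's registered watermark and accepts when the fraction of matching bits reaches a threshold. This is an assumed, simplified formulation rather than the paper's exact definition; the names `bit_accuracy` and `verify` and the 0.9 threshold are hypothetical.

```python
def bit_accuracy(extracted, expected):
    """Fraction of positions where the extracted bits match the expected ones."""
    if len(extracted) != len(expected):
        raise ValueError("bit strings must have the same length")
    matches = sum(e == x for e, x in zip(extracted, expected))
    return matches / len(expected)


def verify(extracted, expected, threshold=0.9):
    """Accept the watermark if enough bits match (threshold is an assumption)."""
    return bit_accuracy(extracted, expected) >= threshold


# Hypothetical 10-bit watermark registered to one user.
owner = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1]

# Removal attack: the extracted bits no longer resemble the owner's mark.
after_removal = [0, 0, 0, 1, 1, 0, 0, 0, 1, 0]

# Forge attack: content the owner never produced carries a near-copy of the mark.
forged = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]

intact_ok = verify(owner, owner)
removal_ok = verify(after_removal, owner)
forge_ok = verify(forged, owner)
```

In this toy setup the removed watermark fails verification, so the content can no longer be traced, while the forged one passes, so the content is wrongly attributed to the owner. These are the two failure modes the study targets.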

Implications and The Road Ahead

The study’s revelations have significant implications. If watermarking isn’t as secure as believed, the misuse of AIGC could become rampant. This could lead to legal disputes, misattributions, and a potential erosion of trust in AI-generated content.

The tech community must revisit protective measures as AI continues its march forward. The vulnerabilities exposed by this study serve as a clarion call for more robust solutions to safeguard the integrity of AI-generated content.