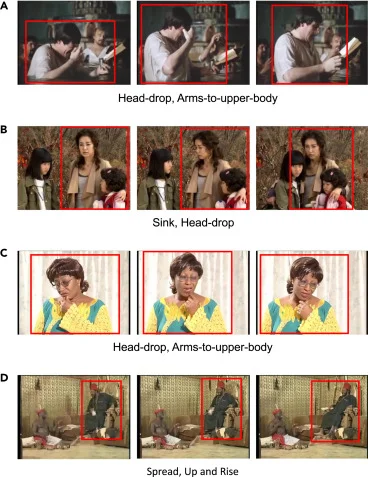

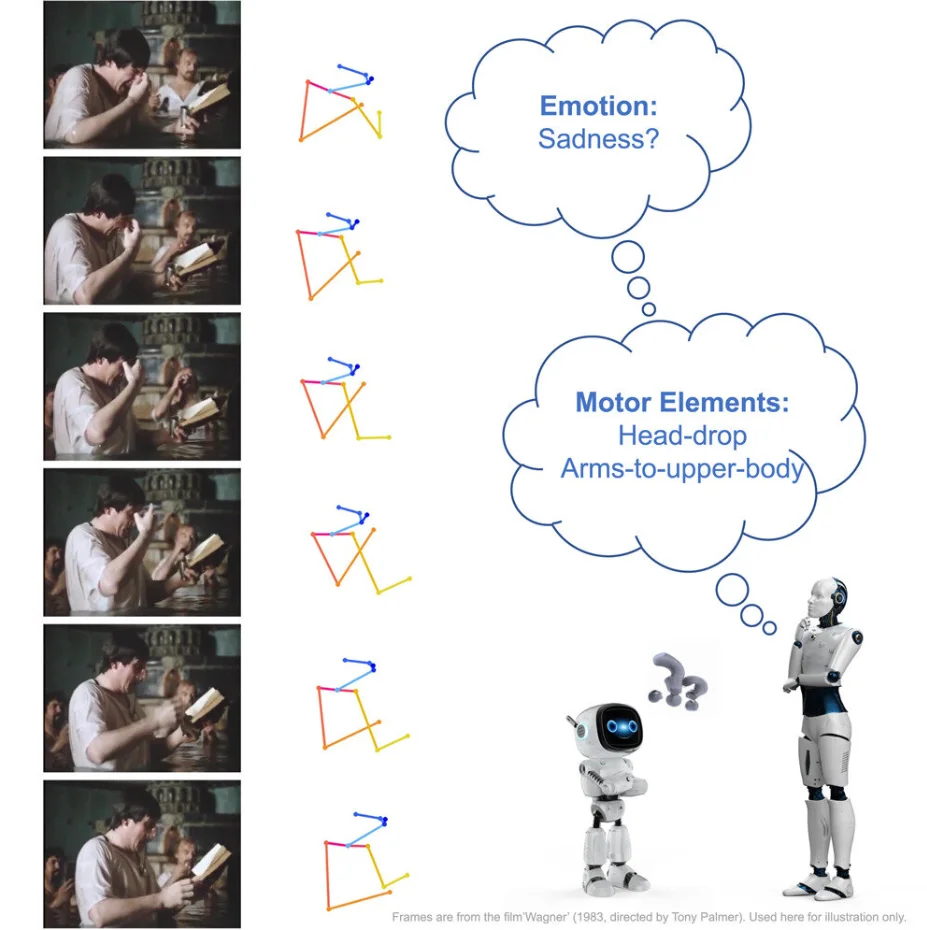

When feeling down, we might fold our hands and lower our gaze. In bouts of joy, we might thrust our fists in the air. Emotions shape our body movements into a kind of self-portrait. Recognizing these external signals may become more accessible to artificial intelligence, thanks to a joint effort by Penn State computer scientists, psychologists, and performing arts enthusiasts.

➜ The Fusion of Technology and Body Language

➜ The Potential Impact of Emotion Recognition

One of the researchers on the Penn State team expressed excitement about the project and its potential impact:

“By harnessing the universal language of body movements, we are bringing AI one step closer to understanding us better. This project opens up new horizons on how we communicate with machines and how they can respond in a way that is more human.”

➜ Envisioning Broader Applications

This project shows how computing, psychology, and the performing arts can come together to change how we interact with technology. If AI can learn to read emotions from body language, virtual assistants might one day act more like virtual companions, recognizing our feelings and responding accordingly. It will be interesting to see how this field develops and where it leads, a topic we at NeuralWit will be watching closely.