LONDON, October 14, 2023 – The rapid advancements in Artificial Intelligence (AI) have raised a pressing question: How do we instill moral values into these systems? Researchers Reneira Seeamber and Cosmin Badea from Imperial College London have comprehensively examined this challenge in their latest publication.

Deciphering Morality in AI

The paper “If we aim to build morality into an artificial agent, how might we begin to do so?” underscores the urgency of embedding morality in AI, especially in systems that make autonomous decisions. The authors examine the multifaceted nature of morality, exploring how it can be defined and the challenges it poses in the AI context.

A Philosophical Lens

The researchers anchor their exploration in three foundational philosophical theories of morality:

Virtue Ethics: This approach emphasizes individual character, advocating for developing virtues like honesty and courage.

Utilitarianism: A consequentialist theory, it promotes actions that yield the maximum happiness or pleasure for the greatest number of people.

Deontology: This theory underscores the inherent moral value of actions or rules, irrespective of their outcomes.

The Cultural Impact on Morality

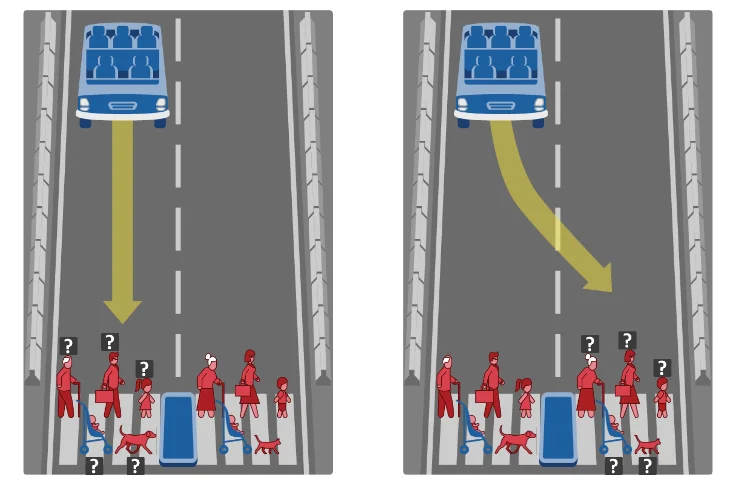

The paper sheds light on the significant role culture plays in shaping morals. Citing the Moral Machine experiment by MIT, the authors reveal how different cultural backgrounds can lead to varied moral decisions. This experiment, which offers variations of the ‘Trolley dilemma,’ underscores the challenges in creating a universally accepted ethical framework for AI.

The Role of Emotion in AI Morality

One of the paper’s intriguing debates revolves around machines’ emotional capacity (or lack thereof). Can AI, devoid of emotions, make moral decisions akin to those of humans? The authors reference the dual-process theory of moral judgment, which posits that humans use both emotional and logical decision-making processes. This leads to the question: Does AI’s lack of emotion make it more objective, or does it hinder its moral judgment?

Pioneering a Hybrid Approach

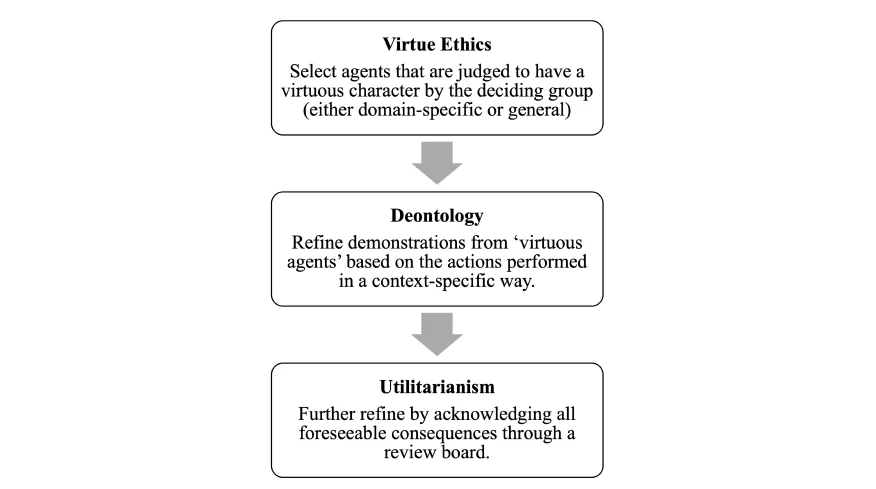

At its core, the research proposes a novel hybrid approach to AI morality. Drawing inspiration from human learning, the authors discuss the potential of inverse reinforcement learning. This method would enable AI to observe human behavior and infer the underlying moral goals, thus offering a richer understanding of human ethics.
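To make the idea of inferring goals from observed behavior concrete, here is a minimal Python sketch of inverse reinforcement learning reduced to a single one-step choice. It is not the authors' method. The scenario, the action names, the two features (people_helped, rule_broken), and the function names are all hypothetical, and the maximum-entropy feature-matching update is a standard technique used here purely for illustration.

```python
import math

# Hypothetical toy scenario (not from the paper): each action is described by
# two made-up features, in the order (people_helped, rule_broken).
ACTIONS = {
    "help_and_follow_rule": (1.0, 0.0),
    "help_but_break_rule": (1.0, 1.0),
    "do_nothing": (0.0, 0.0),
}
N_FEATURES = 2


def softmax_policy(weights):
    """Probability of each action when reward = weights . features."""
    scores = {
        name: sum(w * f for w, f in zip(weights, feats))
        for name, feats in ACTIONS.items()
    }
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {name: math.exp(s - top) for name, s in scores.items()}
    total = sum(exps.values())
    return {name: e / total for name, e in exps.items()}


def infer_reward_weights(demonstrations, steps=2000, learning_rate=0.5):
    """Fit reward weights so the policy's average features match the demos."""
    demo_mean = [
        sum(ACTIONS[a][i] for a in demonstrations) / len(demonstrations)
        for i in range(N_FEATURES)
    ]
    weights = [0.0] * N_FEATURES
    for _ in range(steps):
        policy = softmax_policy(weights)
        model_mean = [
            sum(p * ACTIONS[a][i] for a, p in policy.items())
            for i in range(N_FEATURES)
        ]
        # Gradient of the demonstrations' log-likelihood:
        # observed feature average minus the model's expected features.
        weights = [
            w + learning_rate * (d - m)
            for w, d, m in zip(weights, demo_mean, model_mean)
        ]
    return weights


# Hypothetical observed human choices: mostly helping while following the rule.
demonstrations = (
    ["help_and_follow_rule"] * 8 + ["help_but_break_rule"] + ["do_nothing"]
)
weights = infer_reward_weights(demonstrations)
policy = softmax_policy(weights)
print("inferred weights (people_helped, rule_broken):", weights)
print("most likely action:", max(policy, key=policy.get))
```

In this toy setup the learner is never told that helping is good or that rule-breaking is bad. It recovers a positive weight for people_helped and a negative weight for rule_broken only from which actions the demonstrator chose. Real systems would face sequential decisions, noisy demonstrations, and far richer features, which this sketch leaves out.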

Challenges and Considerations

The paper doesn’t shy away from highlighting potential pitfalls. It underscores the importance of rigorous testing and simulations before AI systems are granted significant moral authority. The authors introduce the concept of keeping AI in a ‘doubt’ mode, inspired by the Hawthorne effect, which could lead to more controlled and ethical AI outcomes.

Furthermore, the paper touches upon real-world challenges, such as multiple AI systems interacting in ways that lead to unforeseen outcomes. This points to the need for a higher-order framework to assess possible AI interactions.

As AI systems become more integrated into our daily lives, the ethical considerations surrounding them become increasingly important. The insights and proposals by Seeamber and Badea offer a roadmap for the moral development of AI, with the aim that as these systems grow smarter, they also grow more ethical.