Google DeepMind, in collaboration with UC Berkeley, MIT, and the University of Alberta, has introduced UniSim, a generative AI system that simulates real-world interactions. The model could redefine how we train AI systems, especially in robotics and gaming.

➜ The Genesis of UniSim

DeepMind, a subsidiary of Google, has long been at the forefront of AI research. Its latest project, UniSim, developed with UC Berkeley, MIT, and the University of Alberta, aims to be the “universal simulator of real-world interaction.” But what does that mean?

Imagine a world where robots can learn from simulations almost indistinguishable from reality. A world where game characters can be trained to interact with their environment in ways previously thought impossible. That’s the vision behind UniSim. The idea is not just to create a tool but to revolutionize how we perceive machine learning and its applications in real-world scenarios.

➜ The Vision Behind UniSim

Researchers at DeepMind and their collaborators have a clear vision for the future of generative models. In a statement that encapsulates their ambition, they expressed:

“The next milestone for generative models is to simulate realistic experience in response to actions taken by humans, robots, and other interactive agents.”

This quote underscores the team’s aspiration to create AI systems that can simulate real-world interactions with unparalleled realism, bridging the gap between virtual training and real-world application.

➜ Diving Deeper into UniSim

UniSim is not just another generative model. It’s designed to simulate the intricate dance of interactions between humans, robots, and their environment. Whether it’s a high-level command like “open the door” or a nuanced movement instruction, UniSim can replicate the outcome.

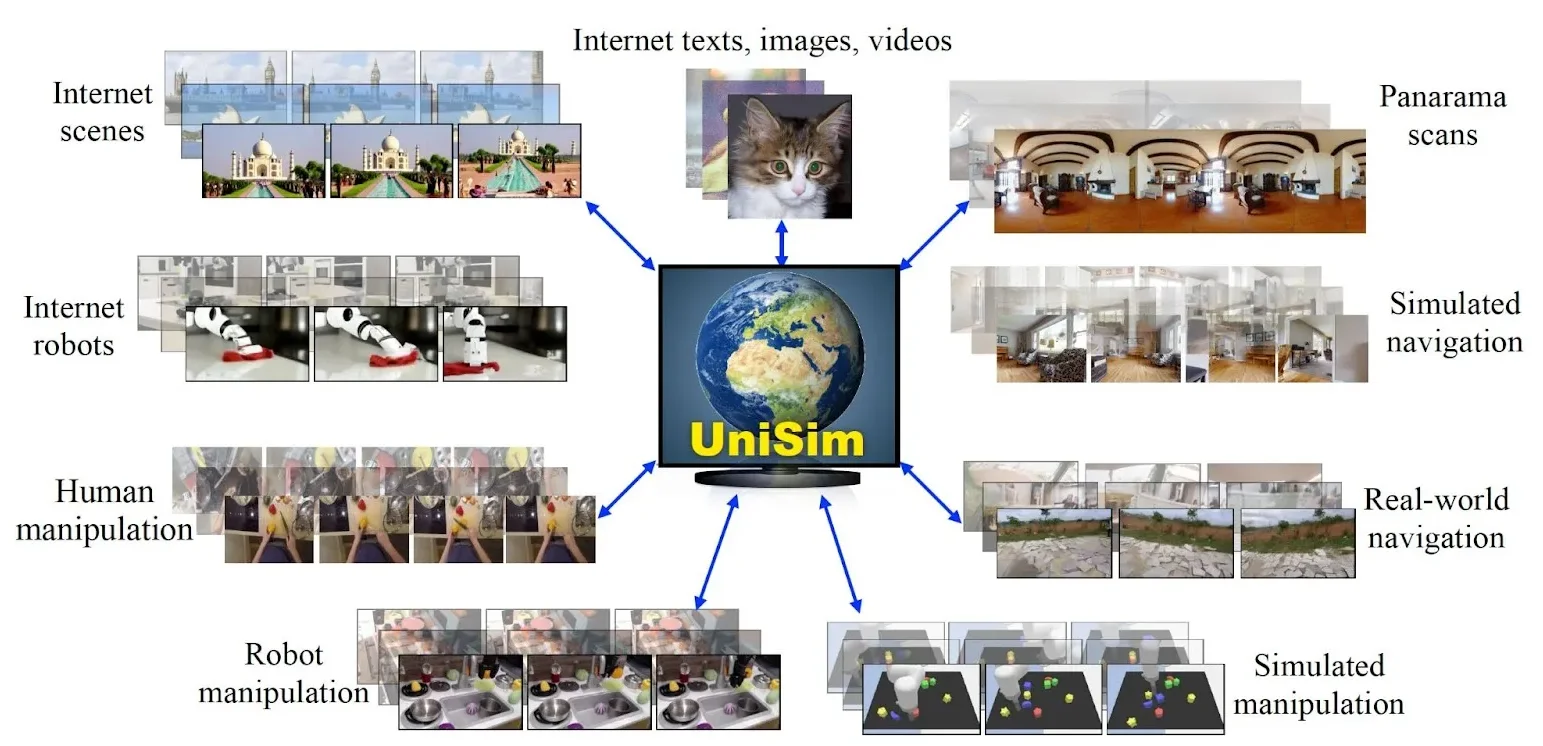

According to the researchers, the goal is to merge vast amounts of data from diverse sources into a cohesive framework. This would allow for the generation of videos that depict a wide range of actions, from human activities to robot interactions, all in photorealistic detail. The potential here is immense. Imagine training a robot without stepping into a physical environment or teaching a virtual character to interact with its surroundings just like a human would.

➜ The Challenges and Triumphs of Data Integration

One of the most significant hurdles in developing UniSim was the diverse nature of the datasets. Each dataset, whether from human activity videos, robot data, or image-description pairs, had unique challenges. The researchers had to find a way to harmonize these datasets, converting them into a unified format that UniSim could use.
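To make the idea of a unified format concrete, here is a minimal Python sketch. It is not the researchers' actual pipeline: the `Transition` record, its field names, and the three converter functions are all hypothetical, chosen only to show how differently shaped datasets could be mapped onto one observation, action, and outcome structure.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Transition:
    """One unified record: what was seen, what was done, what followed."""
    observation: List[str]  # identifiers of frames seen before the action
    action: str             # the action, expressed as text
    outcome: List[str]      # identifiers of frames seen after the action
    source: str             # which kind of dataset the record came from


def from_human_video(frames: Sequence[str], caption: str, split: int) -> Transition:
    # Assumption: the caption describes the activity, so it stands in for the action.
    return Transition(list(frames[:split]), caption, list(frames[split:]), "human_video")


def from_robot_episode(frames: Sequence[str], motor_command: Sequence[float],
                       split: int) -> Transition:
    # Assumption: low-level controls are serialized to text so every action has one type.
    action = "move " + " ".join(f"{value:.2f}" for value in motor_command)
    return Transition(list(frames[:split]), action, list(frames[split:]), "robot")


def from_image_text(image: str, description: str) -> Transition:
    # Assumption: a still image has no "before", so the observation is left empty.
    return Transition([], description, [image], "image_text")


records = [
    from_human_video(["f0", "f1", "f2"], "pour the water", split=1),
    from_robot_episode(["r0", "r1"], [0.1, -0.5], split=1),
    from_image_text("img0", "a red mug on a table"),
]
```

Once every source yields the same record type, a single training loop can consume all of them without caring where each example came from.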

But with challenges come breakthroughs. Using transformer models among other techniques, the team built a system that connects observations, actions, and outcomes. The result? A model that can generate a wide range of photorealistic videos simulating different actions and interactions. The achievement is akin to creating a virtual world whose complexities and nuances mirror our own.

➜ Bridging the Gap Between Simulation and Reality

One of the longstanding challenges in AI training is the “sim-to-real gap.” Training a model in a simulated environment is one thing, but how well does that training translate to the real world? UniSim, with its high visual fidelity, promises to narrow this gap significantly.

Video showing UniSim’s robot action simulation capabilities. The entire scene is rendered as photorealistic video by the model and is not real footage. Credit: DeepMind

According to the researchers, models trained with UniSim have transferred their learned skills to real-world settings. This capability could revolutionize fields like robotics, where real-world training can be expensive and risky. Industries that previously shied away from AI because of the complexities of real-world training might now reconsider.

➜ The Future is Bright with UniSim

The potential applications of UniSim range from game design to robotics. One of the most exciting prospects is training embodied agents purely in simulation and then deploying them in the real world. This could lead to more advanced robots, more capable virtual assistants, and more immersive gaming experiences.
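As a rough illustration of what “training purely in simulation” involves, the Python sketch below collects experience from a simulator instead of a physical environment. Everything here is an assumption made for illustration: the `(observation, action) -> next observation` signature, the `toy_simulator` stand-in, and the fixed policy are hypothetical and do not reflect UniSim’s actual interface.

```python
from typing import Callable, List, Tuple

# Hypothetical signatures, not UniSim's API.
Simulator = Callable[[str, str], str]  # (observation, action) -> next observation
Policy = Callable[[str], str]          # observation -> action


def toy_simulator(observation: str, action: str) -> str:
    """Stand-in for a learned simulator: records the action's effect as text."""
    return f"{observation} -> {action}"


def rollout(simulator: Simulator, policy: Policy, start: str,
            steps: int) -> List[Tuple[str, str, str]]:
    """Collect (observation, action, next_observation) tuples in simulation only."""
    experience = []
    observation = start
    for _ in range(steps):
        action = policy(observation)
        next_observation = simulator(observation, action)
        experience.append((observation, action, next_observation))
        observation = next_observation
    return experience


def always_open_door(observation: str) -> str:
    # A trivial fixed policy; a real agent would choose actions from the observation.
    return "open the door"


trajectory = rollout(toy_simulator, always_open_door, "closed door", steps=2)
```

In a real setup, the collected experience would feed a learning algorithm, and the resulting policy would then be evaluated on physical hardware.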

Furthermore, UniSim’s ability to simulate rare events could be a game-changer for industries like autonomous vehicles. Instead of undergoing risky real-world tests, these vehicles could be trained in the safe confines of a simulation that prepares them for a much wider range of situations. This could reduce costs and improve safety, since potential issues can be identified and fixed in the virtual world before they appear in reality.

Another potential application is in the entertainment industry. Game developers and filmmakers could use UniSim to create more realistic characters and scenarios, enhancing the user experience. Imagine a video game where characters learn and evolve based on their interactions with the environment, or a movie where virtual characters are almost indistinguishable from real actors in their actions and reactions.

DeepMind’s UniSim is a testament to the power of collaboration and innovation. While still in its early stages, its potential impact on various industries is undeniable. As AI continues to evolve, tools like UniSim will play a pivotal role in shaping the future, making the impossible possible. The convergence of technology and reality is on the horizon, and with tools like UniSim, we are one step closer to that future.