Large language models (LLMs) have consistently been at the forefront of discussions about artificial intelligence, and their capabilities have changed how we perceive and interact with technology. As with any evolving technology, challenges remain. One of them is deploying LLMs as autonomous agents. A study from the University of Illinois at Urbana-Champaign titled “LANGUAGE AGENT TREE SEARCH UNIFIES REASONING ACTING AND PLANNING IN LANGUAGE MODELS” proposes a solution in the form of LATS (Language Agent Tree Search).

Understanding the LATS Phenomenon

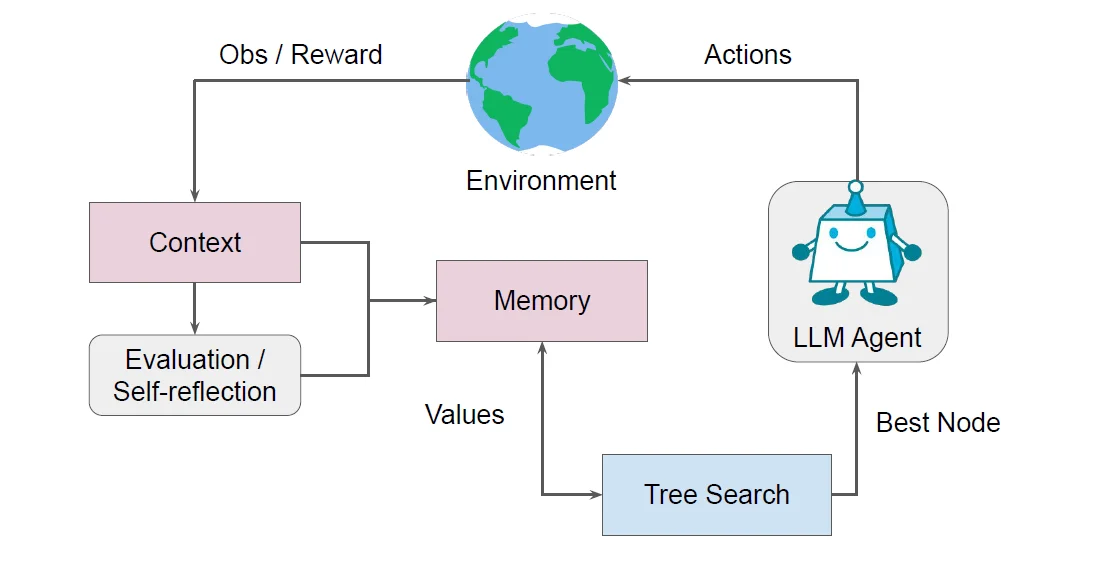

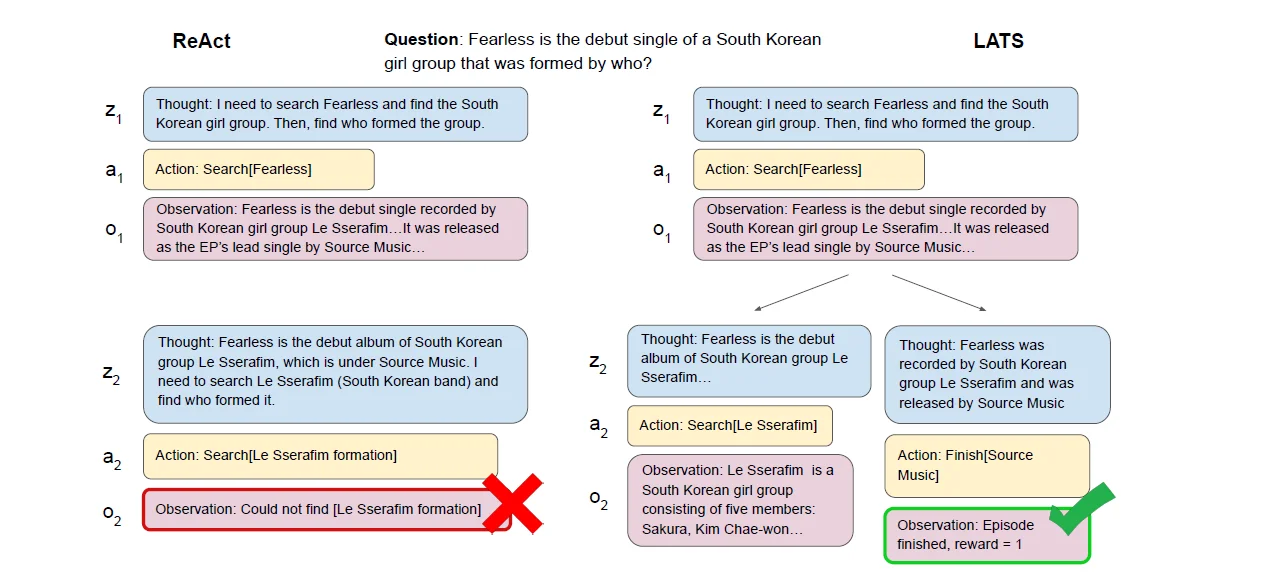

LATS is a framework that aims to combine the capabilities of LLMs in planning, acting, and reasoning. It draws inspiration from Monte Carlo tree search, a well-known technique in model-based reinforcement learning. Instead of treating LLMs as passive text generators, LATS repurposes them as agents, value functions, and optimizers.
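To make the tree-search idea concrete, here is a minimal sketch of a Monte Carlo-style search loop with UCT selection. It is not the authors' implementation: in LATS an LLM would propose actions and score states, whereas here `propose_actions`, `evaluate`, `is_terminal`, and the toy `TARGET` sequence are hypothetical stand-ins chosen so the example runs on its own.

```python
import math

class Node:
    def __init__(self, state, parent=None):
        self.state, self.parent = state, parent
        self.children, self.visits, self.value = [], 0, 0.0

def uct(node, c=1.0):
    # Unvisited children are tried first; otherwise trade off mean value and exploration.
    if node.visits == 0:
        return math.inf
    return node.value + c * math.sqrt(math.log(node.parent.visits) / node.visits)

def tree_search(propose_actions, evaluate, is_terminal, iterations=50):
    root, best_state, best_reward = Node(()), None, -math.inf
    for _ in range(iterations):
        node = root
        while node.children:                      # selection: follow the highest UCT score
            node = max(node.children, key=uct)
        reward = evaluate(node.state)             # evaluation (an LLM would score this in LATS)
        if is_terminal(node.state):
            if reward > best_reward:              # remember the best finished sequence
                best_state, best_reward = node.state, reward
        else:                                     # expansion: one child per proposed action
            node.children = [Node(node.state + (a,), node)
                             for a in propose_actions(node.state)]
        while node is not None:                   # backpropagation: update running means
            node.visits += 1
            node.value += (reward - node.value) / node.visits
            node = node.parent
    return best_state, best_reward

# Toy stand-ins (assumptions for illustration only, not part of LATS).
TARGET = ("b", "a", "b")

def propose_actions(state):
    return ["a", "b"]

def evaluate(state):
    # Fraction of target positions that the partial sequence already matches.
    return sum(s == t for s, t in zip(state, TARGET)) / len(TARGET)

def is_terminal(state):
    return len(state) == len(TARGET)

best_state, best_reward = tree_search(propose_actions, evaluate, is_terminal)
print(best_state, best_reward)
```

The search spends most of its iterations on branches whose partial sequences score well, while the exploration term keeps it from abandoning less-visited branches entirely.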

The Researcher’s Perspective on LATS

“What is crucial in this method is the use of an environment for external feedback, which offers a more deliberate and adaptive problem-solving mechanism that moves beyond the limitations of existing techniques.”

External feedback lets an agent correct course instead of committing to its first answer. For instance, consider a scenario where an LLM recommends a movie. Without external feedback, the model might suggest films based solely on popularity. With feedback, it can refine its recommendations based on user preferences, producing more personalized and accurate suggestions.

LATS’ Impressive Track Record

“Our experimental evaluation across diverse domains, such as programming, HotPotQA, and WebShop, illustrates the applicability of LATS for both reasoning and acting. In particular, LATS achieves 94.4% for programming on HumanEval with GPT-4 and an average score of 75.9 for web browsing on WebShop with GPT-3.5, demonstrating the effectiveness and generality of our method.”

For example, in the programming domain, LATS could be tasked with writing a function to sort a list of numbers. A single-pass approach might miss edge cases or specific requirements, such as empty lists or duplicate values. LATS can instead revise its solution based on feedback from the environment, which makes a correct solution more likely.
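The sorting scenario can be sketched as a small feedback loop. This is an illustration under stated assumptions, not how LATS is implemented: the two drafts `draft_v1` and `draft_v2` are hypothetical, hand-written stand-ins for successive LLM outputs, and the test cases in `TESTS` play the role of the environment.

```python
def run_tests(candidate, tests):
    """Return a description of every failing test (the external feedback)."""
    failures = []
    for args, expected in tests:
        try:
            result = candidate(list(args))  # pass a copy so drafts cannot mutate the tests
        except Exception as exc:
            failures.append(f"input {args!r} raised {type(exc).__name__}")
            continue
        if result != expected:
            failures.append(f"input {args!r}: expected {expected!r}, got {result!r}")
    return failures

def refine(candidates, tests):
    """Try drafts in order and stop at the first one that passes every test."""
    feedback_log = []
    for candidate in candidates:
        failures = run_tests(candidate, tests)
        feedback_log.append((candidate.__name__, failures))
        if not failures:
            return candidate, feedback_log
    return None, feedback_log

# Hypothetical drafts standing in for successive model outputs.
def draft_v1(xs):
    return sorted(set(xs))  # bug: silently drops duplicate values

def draft_v2(xs):
    return sorted(xs)

TESTS = [([3, 1, 2], [1, 2, 3]), ([], []), ([2, 2, 1], [1, 2, 2])]

chosen, log = refine([draft_v1, draft_v2], TESTS)
for name, failures in log:
    print(name, "passed" if not failures else failures)
```

In a real agent the failure messages would be fed back to the model to produce the next draft; here the drafts are fixed in advance so the loop stays self-contained.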

| Prompt Method | Model | Pass@1 |
|---|---|---|
| CoT (Wei et al., 2022) | GPT-3.5 | 46.9 |
| LATS | GPT-4 | 94.4 |

| Method | Score | SR |
|---|---|---|
| ReAct (Yao et al., 2023b) | 53.8 | 28.0 |
| Expert | 82.1 | 59.6 |

The Broader Vision for Autonomous Agents

“General autonomous agents capable of reasoning and decision-making in a variety of environments (Wooldridge & Jennings, 1995) have been of longstanding interest in the field of artificial intelligence.”

Building agents that can reason and make decisions on their own is thus a long-running goal of the AI community. Imagine a virtual assistant that not only answers questions but also makes decisions on the user's behalf, such as scheduling appointments or making purchases, based on past interactions and preferences.

Reflections on LATS’ Performance

“Reflection: In this attempt, I was unsuccessful. I accidentally bought a product that was $100, which is more than my budget of $30. Either way, the initial search results were not good. Next time, I will do search["variety pack of chips"] and then check if the results meet the dairy free and the $30 budget constraints. I will continue to refine my searches so that I can find more products.”

Such reflections offer a glimpse into the agent’s reasoning and its ability to learn from its own actions. This adaptability is akin to a shopper learning from past mistakes and refining their search criteria to find the right product.
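The retry-with-reflection pattern in the quoted example can be sketched as follows. Everything here is a hypothetical stand-in rather than WebShop or the LATS code: the `CATALOG` contents, the product names, and the fixed list of queries are invented for illustration, and only the $100 price and $30 budget come from the quote. In LATS the reflection text would be written by the LLM and added to its context for the next attempt.

```python
from dataclasses import dataclass

@dataclass
class Product:
    name: str
    price: float
    dairy_free: bool

# Hypothetical catalog; a real agent would query a shopping environment instead.
CATALOG = {
    "chips": [Product("Gourmet chip crate", 100.0, True)],
    "variety pack of chips": [Product("Variety pack of chips", 24.99, True)],
}
BUDGET = 30.0

def attempt(query):
    """Pick the first search result and list every constraint it violates."""
    results = CATALOG.get(query, [])
    if not results:
        return None, ["no search results"]
    product = results[0]
    problems = []
    if product.price > BUDGET:
        problems.append(f"price ${product.price:.2f} exceeds budget ${BUDGET:.2f}")
    if not product.dairy_free:
        problems.append("not dairy free")
    return product, problems

def reflect(query, problems):
    """Turn the environment's feedback into a note kept for later attempts."""
    return f"search[{query!r}] failed: " + "; ".join(problems)

def run_episode(queries):
    reflections = []
    for query in queries:
        product, problems = attempt(query)
        if not problems:
            return product, reflections
        reflections.append(reflect(query, problems))
    return None, reflections

bought, reflections = run_episode(["chips", "variety pack of chips"])
print(bought)
print(reflections)
```

The first query fails the budget check and leaves a reflection behind; the second, more specific query satisfies both constraints.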

The Broader Implications of LATS

“Broader impact. LATS is a framework that enhances LLM performance through interactions with an environment. This improvement in autonomous decision-making may facilitate harmful uses of LLMs. Alternatively, LATS enhances interpretability and the potential for greater alignment, as it generates understandable, high-level linguistic reasoning and actions through several rounds of decision-making and reflection, rather than relying on implicit, low-level token values.”

This statement underscores the double-edged nature of technological advancements. While LATS can enhance the performance and interpretability of LLMs, it also raises ethical considerations. For instance, LATS could be used to improve customer service chatbots, but it could also be misused to spread misinformation or manipulate users.