A recently published study proposes a new way to measure the acoustics of different environments. Instead of relying on manually placed equipment, the method uses two robots that move through a space, emitting and receiving sound signals to map the acoustics of their surroundings. The approach could change how we understand and interact with sound in many settings.

The Science Behind the Sound

The acoustics of our environment shape every sound we hear. Whether it is the echo in a grand hall or the muffled tones of a carpeted room, these characteristics are captured by what acousticians call the Room Impulse Response (RIR). Traditionally, measuring the RIR has been cumbersome: a loudspeaker and a microphone must be set up and repositioned at many locations within a room.
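The RIR describes how a room transforms sound on its way from a source to a listener. For a linear, time-invariant room, the signal at the microphone is the source signal convolved with the RIR. The minimal pure-Python sketch below shows that relationship. The toy RIR values are made up for illustration and are not taken from the study.

```python
from typing import List


def apply_rir(dry: List[float], rir: List[float]) -> List[float]:
    """Full linear convolution: the signal a microphone would record when `dry`
    is played in a room whose impulse response is `rir`."""
    if not dry or not rir:
        return []
    out = [0.0] * (len(dry) + len(rir) - 1)
    for i, x in enumerate(dry):
        for j, h in enumerate(rir):
            out[i + j] += x * h  # each input sample launches a scaled copy of the RIR
    return out


# Toy RIR (made-up values): a direct path followed by two quieter reflections.
toy_rir = [1.0, 0.0, 0.5, 0.0, 0.25]
click = [1.0, 0.0, 0.0]
print(apply_rir(click, toy_rir))  # → [1.0, 0.0, 0.5, 0.0, 0.25, 0.0, 0.0]
```

Feeding in a single click returns the RIR itself, padded with zeros. This is why the impulse response is what gets measured: for a given source and receiver position, it fully characterizes how the room will color any sound.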

However, the team, led by Yinfeng Yu and including researchers affiliated with institutions such as Tsinghua University and Microsoft Research, proposes a more efficient method. “As humans, we hear sound every second of our life. The sound we hear is often affected by the acoustics of the environment surrounding us,” the team states in the abstract. The authors go on to describe the inefficiency of traditional methods and introduce their solution: two robots that measure the environment’s acoustics by moving actively while emitting and receiving signals.

Collaborative Robots in Action

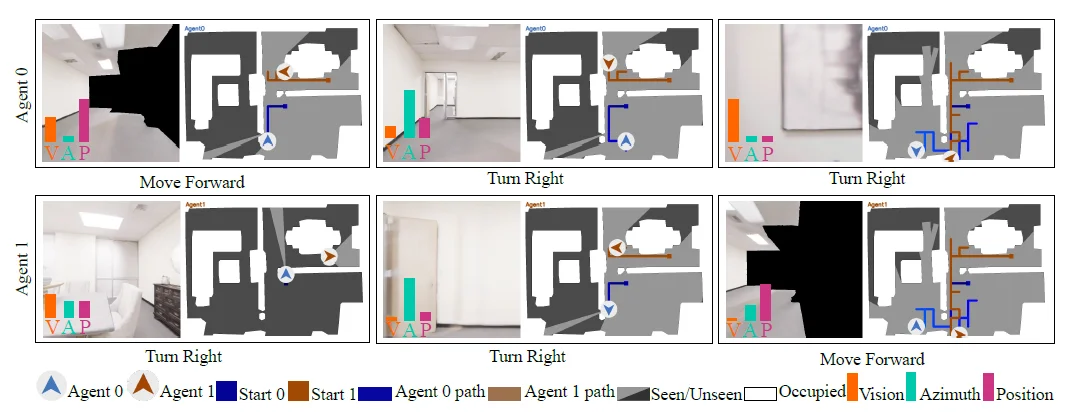

The model, named MACMA (Measuring Acoustics with Collaborative Multiple Agents), uses two collaborative agents (robots) that move through a 3D environment to take acoustic measurements. The agents draw on vision, position, and azimuth information to measure the RIR. They are designed to predict their next action, measure the room impulse response, and balance the trade-off between exploring the scene and measuring its RIR. The primary objective is to explore new scenes widely while predicting their RIR accurately.
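The trade-off between covering new ground and improving RIR predictions can be expressed as a reinforcement-learning reward. One simple form is a weighted sum. The sketch below is hypothetical: `StepOutcome`, its fields, `tradeoff_reward`, and the weight `alpha` are illustrative names and do not describe MACMA's actual reward.

```python
from dataclasses import dataclass


@dataclass
class StepOutcome:
    # Hypothetical per-step statistics, not MACMA's actual state.
    newly_explored_cells: int       # grid cells observed for the first time this step
    prediction_error_before: float  # RIR prediction error before this step's measurements
    prediction_error_after: float   # RIR prediction error after them


def tradeoff_reward(outcome: StepOutcome, alpha: float = 0.5) -> float:
    """Hypothetical reward: `alpha` weights exploration, `1 - alpha` weights
    the reduction in RIR prediction error."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    exploration_term = float(outcome.newly_explored_cells)
    prediction_term = outcome.prediction_error_before - outcome.prediction_error_after
    return alpha * exploration_term + (1.0 - alpha) * prediction_term


outcome = StepOutcome(newly_explored_cells=4, prediction_error_before=0.75, prediction_error_after=0.25)
print(tradeoff_reward(outcome))             # → 2.25
print(tradeoff_reward(outcome, alpha=0.0))  # → 0.5
```

Raising `alpha` pushes the agents to roam. Lowering it rewards them for staying put and refining their measurements.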

One standout feature of the paper is its illustration of how the two collaborative robots operate. The figure, titled “Learn to measure environment acoustics with two collaborative robots,” shows the robots in action, with the background color indicating sound intensity from high to low.

The process is broken down into three distinct steps:

- Robot 0 emits a sound, and Robot 1 receives it.

- Robot 1 emits the sound, and Robot 0 receives it.

- Both robots make a movement following their learned policies.

This sequence repeats until the maximum number of time steps is reached.
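The three-step cycle above can be written as a simple episode loop. This is a minimal sketch under several assumptions. Positions are grid cells, and a "measurement" is only a record of who emitted and who received where. The policies are placeholder functions standing in for the learned ones. `run_episode`, `Measurement`, and `step_east` are all hypothetical names.

```python
from typing import Callable, List, NamedTuple, Tuple

# Hypothetical types: a position is a grid cell, and a policy maps an agent's
# current position to its next one (standing in for a learned policy network).
Position = Tuple[int, int]
Policy = Callable[[Position], Position]


class Measurement(NamedTuple):
    """One emit/receive event; a real system would also store the recorded audio."""
    step: int
    emitter: int
    emitter_pos: Position
    receiver: int
    receiver_pos: Position


def run_episode(start: List[Position], policies: List[Policy], max_steps: int) -> List[Measurement]:
    positions = list(start)
    log: List[Measurement] = []
    for t in range(max_steps):
        # Step 1: robot 0 emits a sound and robot 1 receives it.
        log.append(Measurement(t, 0, positions[0], 1, positions[1]))
        # Step 2: robot 1 emits a sound and robot 0 receives it.
        log.append(Measurement(t, 1, positions[1], 0, positions[0]))
        # Step 3: both robots move according to their policies.
        positions = [policy(pos) for policy, pos in zip(policies, positions)]
    return log


def step_east(pos: Position) -> Position:
    """Placeholder policy: move one cell in +x."""
    return (pos[0] + 1, pos[1])


log = run_episode([(0, 0), (5, 5)], [step_east, step_east], max_steps=2)
```

With `max_steps=2`, the log holds four measurements. The swapped emitter and receiver roles in steps 1 and 2 give two measurements per position pair.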

Exploration and RIR Prediction

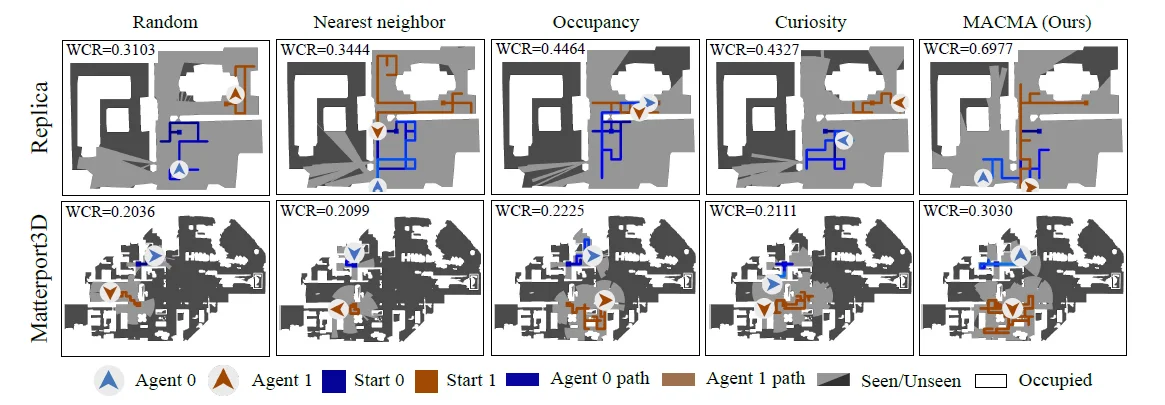

The paper provides qualitative comparisons of both exploration capability and RIR prediction. For exploration, it plots the navigation trajectories of both agents in different environments. The light-gray areas in this figure indicate the field the robots have explored.
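The light-gray "explored" region can be thought of as the union of everything within sensing range of either robot's path. The sketch below computes that union and a coverage fraction on a grid. The square (Chebyshev) sensing radius and the grid representation are simplifying assumptions, not the paper's exploration metric.

```python
from typing import Iterable, Set, Tuple

Cell = Tuple[int, int]


def explored_cells(trajectories: Iterable[Iterable[Cell]], radius: int) -> Set[Cell]:
    """Cells within a square (Chebyshev) sensing radius of any visited cell."""
    seen: Set[Cell] = set()
    for path in trajectories:
        for x, y in path:
            for dx in range(-radius, radius + 1):
                for dy in range(-radius, radius + 1):
                    seen.add((x + dx, y + dy))
    return seen


def coverage(trajectories: Iterable[Iterable[Cell]], radius: int, free_cells: Set[Cell]) -> float:
    """Fraction of navigable cells that fall inside the explored region."""
    if not free_cells:
        return 0.0
    return len(explored_cells(trajectories, radius) & free_cells) / len(free_cells)


room = {(x, y) for x in range(4) for y in range(4)}  # a 4 x 4 toy room
paths = [[(0, 0)], [(3, 3)]]                           # one cell per robot
print(coverage(paths, radius=1, free_cells=room))      # → 0.5
```

Intersecting with `free_cells` keeps cells outside the room, such as those past a wall or beyond the map edge, from inflating the score.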

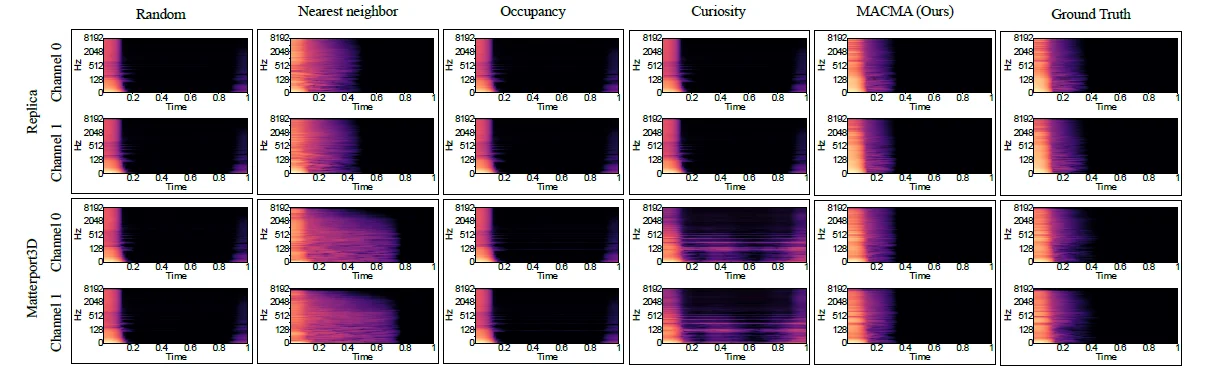

For RIR prediction, the researchers show spectrograms generated by their model alongside ground-truth spectrograms. These binaural (two-channel) spectrograms show how the energy of the sound is distributed across frequency over a one-second duration.
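A spectrogram shows how a signal's energy is spread over frequency and time, and a binaural spectrogram is one spectrogram per ear. The sketch below computes magnitude spectrograms for a left and a right channel using a Hann window and a naive DFT, so it runs with the standard library alone. The frame length and hop size are illustrative defaults, not the paper's settings. A real pipeline would use an FFT routine instead.

```python
import cmath
import math
from typing import List


def stft_magnitude(signal: List[float], frame_len: int, hop: int) -> List[List[float]]:
    """Magnitude spectrogram (frames x frequency bins) via a naive DFT of Hann-windowed frames."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len) for n in range(frame_len)]
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        chunk = [signal[start + n] * window[n] for n in range(frame_len)]
        bins = []
        for k in range(frame_len // 2 + 1):  # non-negative frequencies only
            acc = sum(chunk[n] * cmath.exp(-2j * math.pi * k * n / frame_len) for n in range(frame_len))
            bins.append(abs(acc))
        frames.append(bins)
    return frames


def binaural_spectrogram(left: List[float], right: List[float], frame_len: int = 256, hop: int = 128):
    """One magnitude spectrogram per ear: [left, right]."""
    return [stft_magnitude(left, frame_len, hop), stft_magnitude(right, frame_len, hop)]


sample_rate = 64  # toy rate so the example runs quickly; bin spacing is sample_rate / frame_len = 1 Hz
left = [math.sin(2 * math.pi * 8 * n / sample_rate) for n in range(256)]  # 8 Hz tone
right = [0.0] * 256                                                      # silent channel
spec = binaural_spectrogram(left, right, frame_len=64, hop=32)
first = spec[0][0]
print(max(range(len(first)), key=first.__getitem__))  # → 8
```

The 8 Hz tone produces its peak in bin 8 of every left-channel frame. The silent right channel yields all-zero magnitudes.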

Visual Features and State Features

The MACMA framework uses encoders to generate visual features. The researchers overlay the output of the visual encoder on the RGB input images, revealing which image regions the encoder focuses on.
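One common way to overlay an encoder's activation map on an input frame is to normalize the map and alpha-blend a highlight color into the image. The minimal sketch below assumes the activation map has already been resized to the image's height and width. `overlay_heatmap`, its parameters, and the single-color highlight are illustrative choices rather than the paper's visualization code.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def overlay_heatmap(
    image: List[List[RGB]],
    heat: List[List[float]],
    alpha: float = 0.5,
    color: RGB = (255, 0, 0),
) -> List[List[RGB]]:
    """Alpha-blend `color` into each pixel in proportion to its normalized activation.

    Assumes `heat` has already been resized to the image's height and width.
    """
    flat = [v for row in heat for v in row]
    if not flat:
        return [list(row) for row in image]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid dividing by zero on a constant map
    blended = []
    for img_row, heat_row in zip(image, heat):
        new_row = []
        for pixel, v in zip(img_row, heat_row):
            w = alpha * (v - lo) / span  # 0 at the weakest activation, alpha at the strongest
            new_row.append(tuple(round((1 - w) * c + w * h) for c, h in zip(pixel, color)))
        blended.append(new_row)
    return blended


gray_row = [[(100, 100, 100), (100, 100, 100)]]
print(overlay_heatmap(gray_row, [[0.0, 1.0]], alpha=0.5, color=(200, 0, 0)))
# → [[(100, 100, 100), (150, 50, 50)]]
```

Min-max normalization makes the strongest activation in each frame map to the full `alpha` tint. Maps are therefore comparable within an image but not across images.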

Implications and Future Applications

The research could have wide-ranging applications. RIR is crucial in fields such as sound rendering, sound source localization, and audio-visual navigation. A simpler and more efficient way to measure it could give industries more accurate soundscapes, improving user experiences in virtual reality, gaming, architectural acoustics, and beyond.

Moreover, the collaborative nature of the robots opens the door to further work on multi-agent systems. The researchers describe their work as the “first problem formulation and solution for collaborative environment acoustics measurements using multiple agents.”

Looking Ahead

While the current findings are promising, the team acknowledges certain limitations. The research was conducted in a virtual environment, and real-world applications might present unforeseen challenges. The team is optimistic about future studies and is interested in “evaluating our method on real-world cases, such as a robot moving in a real house and learning to measure environmental acoustics collaboratively.”

In conclusion, this research offers a fresh perspective on acoustic measurement. As the technology matures, collaborative robots trained with approaches like MACMA might eventually become a standard tool for measuring acoustics, shaping how we experience and interact with sound.

For more in-depth details on this research, readers are encouraged to access the complete study titled “Measuring Acoustics with Collaborative Multiple Agents.”