In a recently published study, researchers Xingsi Dong and Si Wu from the PKU-Tsinghua Center for Life Sciences, Peking University, present a model of how the brain carries out inference and learning. The model, called the Hierarchical Exponential-family Energy-based (HEE) model, offers an account of how the brain interprets the external world.

Bayesian Brain Theory: A Recap

The Bayesian brain theory posits that the brain uses generative models to understand and interpret the external environment. It suggests that the brain infers the posterior distribution over the causes of its sensory input by sampling, with stochastic neuronal responses serving as the samples. In simpler terms, the brain continually revises its beliefs about the world as new sensory evidence arrives.
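As a compact restatement of this idea, using symbols introduced here for illustration rather than taken from the study: let $x$ be the sensory input and $z$ the latent causes. A generative model specifies a prior $p(z)$ and a likelihood $p(x \mid z)$, and inference targets the posterior. Under the sampling hypothesis, successive neural responses $z^{(1)}, \dots, z^{(T)}$ are treated as draws from that posterior, so posterior expectations become simple averages:

$$
p(z \mid x) = \frac{p(x \mid z)\, p(z)}{\int p(x \mid z')\, p(z')\, dz'},
\qquad
\mathbb{E}\big[f(z) \mid x\big] \approx \frac{1}{T} \sum_{t=1}^{T} f\big(z^{(t)}\big).
$$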

Introducing the HEE Model

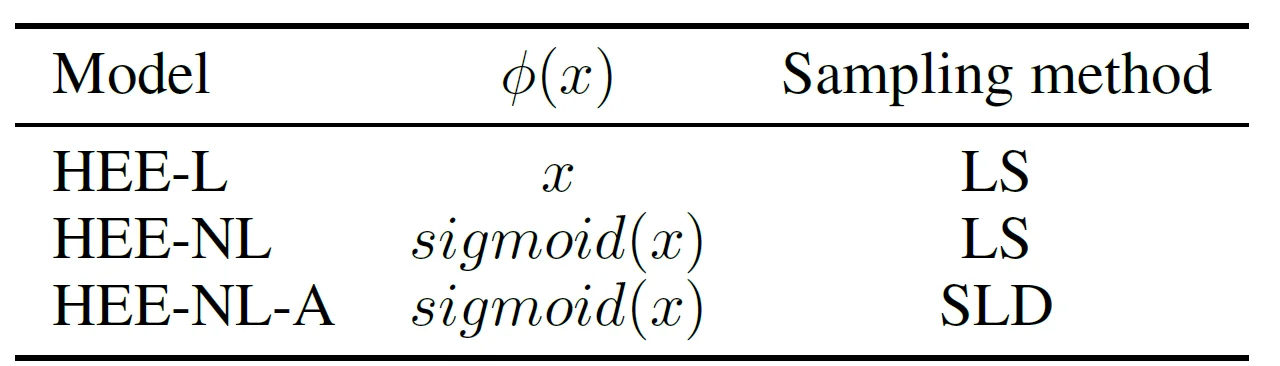

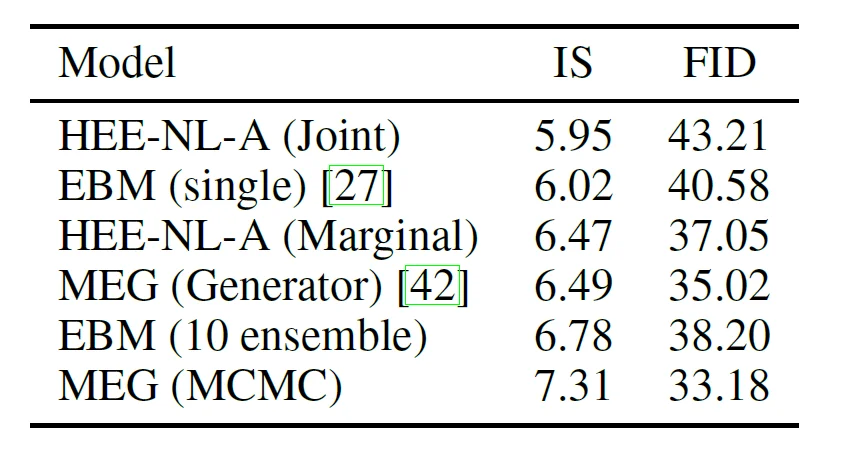

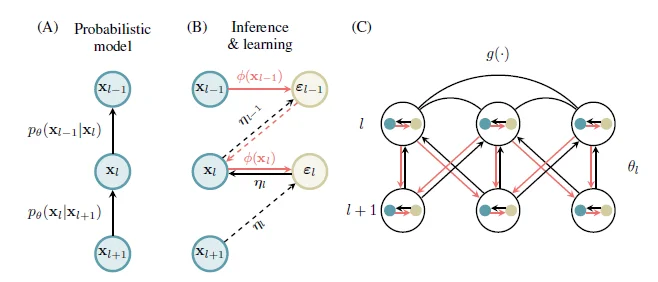

The HEE model differs from earlier approaches in how it captures the dynamics of inference and learning. It decomposes the partition function into per-layer normalization terms and uses a group of neurons with shorter time constants to sample the gradient of each term. This lets the model estimate the partition function while it performs inference, sidestepping a difficulty of conventional energy-based models (EBMs).
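One way to see why a per-layer normalization term can be handled by sampling is sketched here, under assumptions this article does not confirm. Suppose each layer's conditional distribution is an exponential family whose natural parameter $\eta_l$ is set by the layer above, with sufficient statistic $T$ and log-normalizer $A$. The symbols and the exact factorization are illustrative, not the authors' notation.

$$
p(z_l \mid z_{l+1}) = h(z_l)\,\exp\!\Big(\eta_l^{\top} T(z_l) - A(\eta_l)\Big),
\quad \eta_l = \eta_l(z_{l+1}),
\qquad
\nabla_{\eta_l} A(\eta_l) = \mathbb{E}_{z_l \sim p(\cdot \mid z_{l+1})}\big[T(z_l)\big].
$$

The second identity is a standard property of exponential families: the gradient of the log-normalizer is an expectation of the sufficient statistic, so it can be estimated by averaging samples drawn within that layer alone.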

Energy-Based Models (EBMs) and Their Challenges

EBMs have long provided a framework for inference using a sampling method and learning with spatially localized rules. However, a significant challenge with EBMs is estimating the partition function, which requires a separate sampling process known as the negative phase. Running the negative phase overwrites the inference results held in the network’s activity, so the network cannot keep its current inference while it estimates the partition function.
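The role of the negative phase can be made explicit with the standard maximum-likelihood gradient of a generic EBM. The notation ($E_\theta$ for the energy, $Z(\theta)$ for the partition function) is introduced here for illustration and is not taken from the study:

$$
p_\theta(x) = \frac{e^{-E_\theta(x)}}{Z(\theta)},
\qquad
Z(\theta) = \int e^{-E_\theta(x')}\, dx',
$$

$$
\nabla_\theta \log p_\theta(x)
= \underbrace{-\nabla_\theta E_\theta(x)}_{\text{positive phase (data)}}
+ \underbrace{\mathbb{E}_{x' \sim p_\theta}\big[\nabla_\theta E_\theta(x')\big]}_{\text{negative phase (model samples)}}.
$$

The second term equals $-\nabla_\theta \log Z(\theta)$. Evaluating it requires samples from the model itself rather than from data, which is why the network has to leave the state it reached during inference.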

The HEE Model’s Innovations

The HEE model’s key innovation is to distribute the partition function across the layers, which improves convergence. Because a group of neurons with fast dynamics samples the normalization term within each layer, learning becomes local in both time and space. This locality makes the model more consistent with how learning is thought to work in the brain.

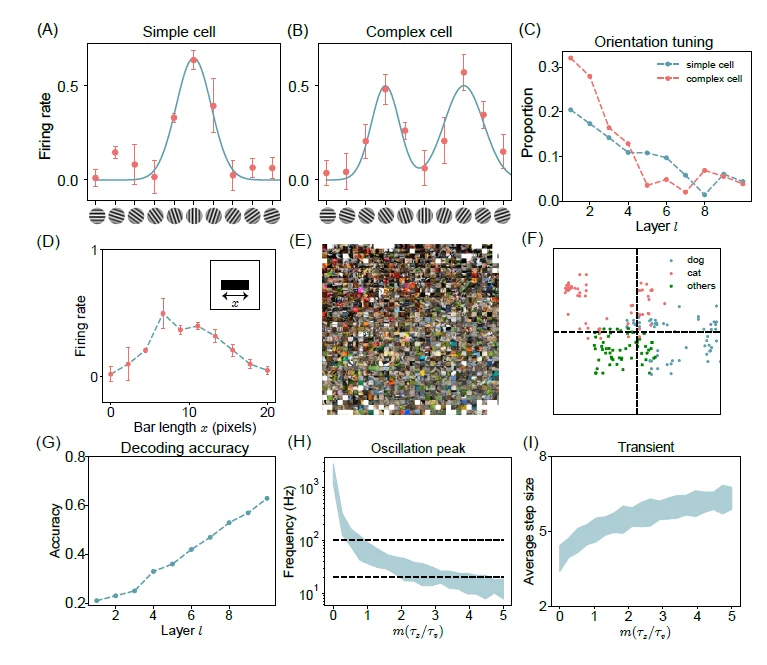

Furthermore, the researchers found that incorporating noisy adaptation into the inference dynamics yields second-order Langevin dynamics. They also report that the model’s representations resemble those of the biological visual system, especially for semantic information such as orientation, color, and category.
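To make "second-order Langevin dynamics" concrete, here is a minimal sketch of a generic underdamped Langevin sampler in Python with NumPy. It is not the authors' derivation or their neural equations. The quadratic toy energy, the `friction` coefficient, the step size, and all function names are assumptions chosen for illustration. The velocity variable `v` is the extra state that makes the dynamics second-order.

```python
import numpy as np


def grad_energy(x, mean=1.0, var=0.25):
    """Gradient of the toy energy U(x) = (x - mean)^2 / (2 * var)."""
    return (x - mean) / var


def underdamped_langevin(n_steps=200_000, dt=0.01, friction=2.0, seed=0):
    """Approximately sample x from exp(-U(x)) with second-order Langevin dynamics.

    Continuous-time form (unit mass, unit temperature):
        dx = v dt
        dv = (-friction * v - grad U(x)) dt + sqrt(2 * friction) dW
    """
    rng = np.random.default_rng(seed)
    # Pre-draw the Gaussian increments for every step.
    noise = np.sqrt(2.0 * friction * dt) * rng.standard_normal(n_steps)
    x, v = 0.0, 0.0
    samples = np.empty(n_steps)
    for t in range(n_steps):
        # Update the velocity first, then move the position with the new velocity.
        v += dt * (-friction * v - grad_energy(x)) + noise[t]
        x += dt * v
        samples[t] = x
    return samples


samples = underdamped_langevin()
kept = samples[20_000:]  # discard an initial transient
print(f"sample mean {kept.mean():.2f}, sample variance {kept.var():.2f}")
```

With these settings the sample mean and variance should land near the toy target's 1.0 and 0.25, up to discretization and Monte Carlo error. A first-order sampler would update `x` directly from the gradient and noise, with no velocity state.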

Implications for the Machine Learning Community

Beyond its neuroscientific implications, the HEE model also addresses a long-standing challenge in the machine learning community: estimating the partition function. The study suggests that the HEE model’s brain-inspired approach presents a viable technique for this estimation, bridging the gap between neuroscience and machine learning.

The Hierarchical Exponential-family Energy-based (HEE) model offers a fresh perspective on how the brain carries out inference and learning. As researchers explore its capabilities further, it may inform both our understanding of human cognition and applications in machine learning.